Embedding organisms in Virtual Reality (VR) worlds to understand the evolution and mechanisms of social interactions

Allowing real predators to hunt, and exert selection pressure on, a virtual prey population

It is becoming possible to embed organisms in increasingly sophisticated virtual environments. We previously developed a method that allows real predatory fish to interact with a virtual prey population, described in:

Ioannou, C.C., Guttal, V. & Couzin, I.D. (2012) Predatory fish select for coordinated collective motion in virtual prey. Science 337(6099): 1212–1215.

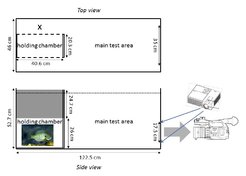

Movement in animal groups is highly varied, ranging from seemingly disordered motion in swarms to coordinated, aligned motion in flocks and schools. Such collective behaviors are often thought to reduce predation risk, although direct evidence for this has been lacking. We investigated risk-related selection for collective motion by allowing real predators (bluegill sunfish) to hunt mobile virtual prey projected onto a thin translucent film just inside the tank, as illustrated.

The fish readily preyed on the virtual zooplankton prey. Embedding real predators in such a virtual environment allowed us to isolate the selection pressure imposed by predation from the many other factors that influence the evolution of traits, such as the costs associated with a trait and taxonomic constraints.

Individual prey behavior was encoded by three traits: the strength of each prey's tendency to be attracted toward, align its direction of travel with, or ignore near neighbors. Depending on these traits, prey exhibited a range of movement behaviors, including a solitary random walk, the formation and maintenance of aggregations, and coordinated polarized motion.
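To make the three traits concrete, here is a minimal sketch of how such weights might be blended into a single steering decision. It is not the model used in the study: the function name `desired_heading`, the use of the neighbors' centroid for attraction, the summed neighbor heading for alignment, and a uniformly random direction standing in for "ignore" are all illustrative assumptions.

```python
import math
import random


def unit(x, y):
    """Scale (x, y) to length 1; return (0.0, 0.0) for a zero vector."""
    n = math.hypot(x, y)
    return (x / n, y / n) if n > 0 else (0.0, 0.0)


def desired_heading(pos, heading, neighbors, w_attract, w_align, w_ignore, rng=random):
    """Blend three hypothetical social tendencies into a new unit heading.

    pos, heading: (x, y) tuples for the focal prey; heading is a unit vector.
    neighbors: list of ((x, y) position, (x, y) heading) pairs within sensing range.
    w_attract, w_align, w_ignore: non-negative trait weights (illustrative only).
    """
    if neighbors:
        # Attraction: unit vector pointing at the neighbors' centroid.
        cx = sum(p[0] for p, _ in neighbors) / len(neighbors)
        cy = sum(p[1] for p, _ in neighbors) / len(neighbors)
        attract = unit(cx - pos[0], cy - pos[1])
        # Alignment: direction of the summed neighbor headings.
        align = unit(sum(h[0] for _, h in neighbors), sum(h[1] for _, h in neighbors))
    else:
        attract = align = (0.0, 0.0)
    # "Ignore": a random direction, i.e. the solitary random-walk component.
    theta = rng.uniform(0.0, 2.0 * math.pi)
    wander = (math.cos(theta), math.sin(theta))
    x = w_attract * attract[0] + w_align * align[0] + w_ignore * wander[0]
    y = w_attract * attract[1] + w_align * align[1] + w_ignore * wander[1]
    new = unit(x, y)
    # If the tendencies cancel out exactly, keep the current heading.
    return new if new != (0.0, 0.0) else heading


# A neighbor to the right, also heading right, turns a prey that is heading "up".
print(desired_heading((0.0, 0.0), (0.0, 1.0), [((1.0, 0.0), (1.0, 0.0))], 1.0, 1.0, 0.0))  # prints (1.0, 0.0)
```

In a sketch like this, a high attraction weight alone would be expected to produce clumping, adding alignment would favor polarized groups, and a dominant "ignore" weight would give something close to a random walk.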

The predators exerted strong selection pressure on this virtual population. Prey with tendencies both to be attracted toward, and to align their direction of travel with, near neighbors tended to form mobile, coordinated groups and were rarely attacked. These results demonstrate that collective motion could evolve as a response to predation, even when prey are unable to detect and respond to predators.
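One way to picture how predator attacks become selection on these traits is a simple genetic-algorithm step: attacked prey are replaced by mutated copies of surviving prey, so trait combinations that avoid attack become more common over generations. This is a hypothetical sketch, not the procedure used in the paper; the name `next_generation`, the Gaussian mutation, the clamping of weights at zero and the constant population size are all assumptions. In a real experiment the `attacked` set would come from the live predator's behavior, not from code.

```python
import random


def next_generation(traits, attacked, mutation_sd=0.05, rng=random):
    """Hypothetical selection step: replace attacked prey with mutated offspring.

    traits: list of (w_attract, w_align, w_ignore) tuples, one per prey.
    attacked: set of indices of prey attacked by the predator this round.
    Returns a new list of the same length (constant population size).
    """
    survivors = [t for i, t in enumerate(traits) if i not in attacked]
    if not survivors:
        raise ValueError("every prey was attacked; no survivors to reproduce")
    offspring = []
    for i, t in enumerate(traits):
        if i in attacked:
            # Copy a randomly chosen survivor and add Gaussian noise to each weight,
            # clamping at zero so the weights stay non-negative.
            parent = rng.choice(survivors)
            offspring.append(tuple(max(0.0, w + rng.gauss(0.0, mutation_sd)) for w in parent))
        else:
            offspring.append(t)  # survivors carry over unchanged
    return offspring


population = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
print(next_generation(population, attacked={2}, rng=random.Random(42)))
```

Because only attacked individuals are replaced, whatever the predator tends to ignore is what accumulates in the population.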

A video of our experiment is shown below.

Embedding animals in reactive, 3D Virtual Reality (VR) environments

We have now extended this approach to allow real-time feedback between the virtual environment and real fish, by tracking the eyes of a focal fish in 3D space (for a general illustration of how this technology works, see http://www.youtube.com/watch?v=Jd3-eiid-Uw from about 2 min 30 s). The head-tracking component, which creates the illusion that objects ‘pop out’ of the screen, is shown below. The illusion in this video is limited by the size of the display: when objects reach the edges of the projection surface, they cannot be rendered correctly. Consequently, in our VR environments we are adopting hemispheric, or surround, projection.
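Surround projection eases the edge problem because, from the tracked eye position, far more lines of sight land on the screen. A minimal sketch, assuming an idealized spherical screen of known center and radius with the eye inside it: a virtual point is drawn where the ray from the eye through that point meets the sphere. The helper `project_to_sphere` and this geometry are illustrative assumptions; a real dome also needs projector calibration and distortion correction, and on a hemisphere only hits on the physical half can actually be displayed.

```python
import math


def project_to_sphere(eye, point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Return the spot on a spherical screen where `point` should be drawn.

    The spot is where the ray from the eye through the virtual point meets
    the sphere, so from `eye` the drawn spot lines up with the virtual point.
    Assumes the eye is strictly inside the sphere.
    """
    d = [p - e for p, e in zip(point, eye)]
    length = math.sqrt(sum(c * c for c in d))
    if length == 0:
        raise ValueError("point coincides with the eye")
    d = [c / length for c in d]  # unit ray direction
    oc = [e - k for e, k in zip(eye, center)]  # eye relative to sphere center
    # The ray eye + t*d hits the sphere where t^2 + 2*b*t + c_term = 0.
    b = sum(di * oi for di, oi in zip(d, oc))
    c_term = sum(oi * oi for oi in oc) - radius ** 2
    if c_term >= 0:
        raise ValueError("eye must be inside the sphere")
    t = -b + math.sqrt(b * b - c_term)  # the positive root (eye is inside)
    return tuple(e + t * di for e, di in zip(eye, d))


# Eye off-center: the drawn spot depends on where the tracked eye is.
print(project_to_sphere((0.5, 0.0, 0.0), (0.5, 0.5, 0.0)))
```

Recomputing this for every rendered point whenever the eye moves is what keeps the virtual object fixed in space from the fish's point of view.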

Panels (A) and (B) below show a schematic of the same method used in the video above to create objects that appear to lie between the monitor (or projected image) and the fish. The white rectangle represents an area of the tank, with the test fish shown in silhouette. The black rectangle represents what is shown on a computer monitor placed against the wall of the tank (i.e. not in the orientation shown here). In (A), a stationary fish is presented with circular and triangular projected objects; in (B), when the fish moves, the images on the monitor are updated according to its new eye position. In practice, updates are performed at a frame rate higher than the flicker fusion frequency of the fish eye, and the objects are rendered as projections of 3D objects. Panel (C) shows a photograph of a real zebrafish (top) and the virtual zebrafish, still in development (bottom). We hope to have some videos of our new Fish VR system soon!
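The geometry of panels (A) and (B) reduces to a line-plane intersection. A minimal sketch, assuming the monitor lies in the plane z = 0, the fish is on the z > 0 side, and all positions share the same units: a virtual point P is drawn where the line from the eye E through P meets the screen, at S = E + t(P − E) with t = E_z / (E_z − P_z). The helper `project_to_screen` is hypothetical; a real renderer would apply the equivalent off-axis (asymmetric-frustum) projection to every vertex, once per frame.

```python
def project_to_screen(eye, point):
    """Return the (x, y) position on the screen plane z = 0 at which to draw `point`.

    Coordinates (assumed): the monitor lies in z = 0 and the fish is on the
    z > 0 side. The drawn position is where the line from the eye through the
    virtual point meets the screen, so the two line up from the fish's view.
    """
    ex, ey, ez = eye
    px, py, pz = point
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    if pz >= ez:
        raise ValueError("point must be closer to the screen than the eye is")
    t = ez / (ez - pz)  # parameter along eye -> point at which z reaches 0
    return (ex + t * (px - ex), ey + t * (py - ey))


# A virtual object halfway between the screen and the eye (compare panel A) ...
print(project_to_screen((0.0, 0.0, 10.0), (0.0, 0.0, 5.0)))  # prints (0.0, 0.0)
# ... and after the fish moves 2 units to the right (compare panel B).
print(project_to_screen((2.0, 0.0, 10.0), (0.0, 0.0, 5.0)))  # prints (-2.0, 0.0)
```

Moving the eye 2 units to the right shifts the drawn image of this point 2 units to the left; that motion parallax, recomputed every frame, is what makes the object appear to float in front of the monitor.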

This technology will allow us to create an immersive, photorealistic virtual world that gives us much more control over the stimuli we present to individuals, including virtual conspecifics that appear to be in the water with the fish and can interact with them in real time. This is a collaboration with Prof. Andrew Straw; Dr. John Stowers and Max Hofbauer from LoopBio GmbH are working with us to develop the hardware and software required for this exciting venture. An excellent introduction to this technology can be found in:

Stowers, J.R., Fuhrmann, A., Hofbauer, M., Streinzer, M., Schmid, A., Dickinson, M.H. & Straw, A.D. (2014) Reverse engineering animal vision with virtual reality and genetics. Computer 47(7): 38–45.

A video of the Straw Lab’s VR systems is included here.